Introduction to Disaster Recovery Insights: Managing Kubernetes, Longhorn, and Databases

If you're running a Kubernetes cluster, chances are you're well aware of the complexities that come with managing storage, workloads, and high availability. At Open200, we've invested in a robust architecture, leveraging k0s for Kubernetes, Longhorn for storage, and ArgoCD coupled with mostly Helm for deployment management. It's a stack designed for resilience, but as we found out, no system is foolproof.

When Hetzner Cloud, our cloud provider, experienced a brief but impactful outage, we were thrown into a scenario that tested the durability of our setup. Although Kubernetes' self-healing properties initially made it seem like a minor hiccup, the delayed aftershocks highlighted the devil in the details. Notably, issues with our databases and storage solutions required a deep dive to resolve.

This blog post serves as a field report, aimed at helping you understand some pitfalls and quirks that may not be immediately obvious. We'll walk you through the challenges we faced, the steps we took to resolve them, and the future-proofing measures now in place to better prepare us - and potentially you - for unexpected disruptions.

Cloud Outage Timeline: Learning from Kubernetes, Longhorn, and Database Failures

- Short Outage: Hetzner Cloud experienced a brief outage, taking two nodes offline briefly.

- Initial Recovery: Kubernetes seemed to self-heal, bringing apps back to life.

- Delayed Symptoms: 1-2 hours later, it became apparent that MongoDB and Postgres pods failed and were not able to self-heal.

- Insult to Injury: Ironically, both MongoDB and Postgres had replicas scheduled on the two nodes that went offline, despite having node antiaffinity enabled. This distributed them to the exact nodes that failed.

The Problem: PVC Reattachment, Disk Space, and Longhorn Quirks

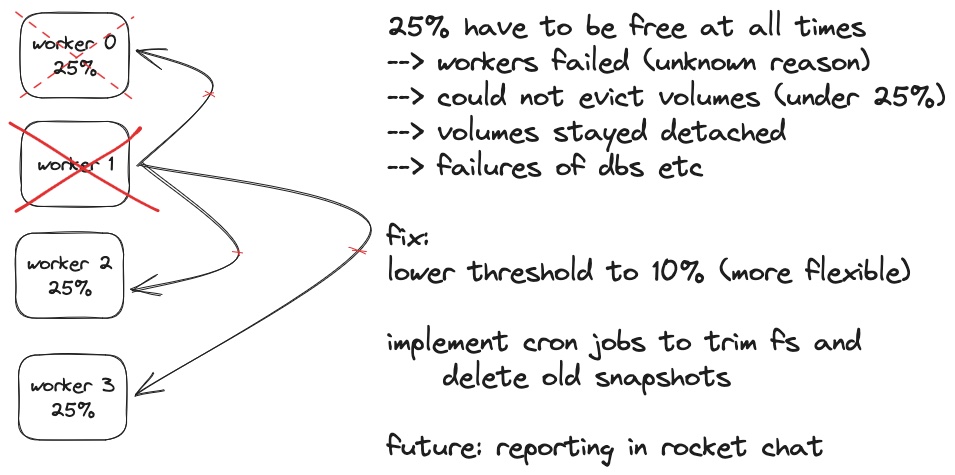

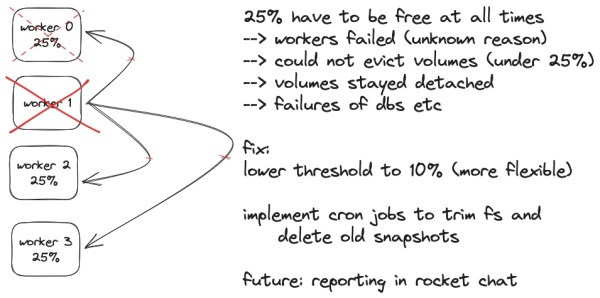

A known issue in Longhorn led to complications: Snapshots, although deleted via the Longhorn GUI or k9s, continue to occupy disk space. This created a cascade of problems due to Longhorn's default setting, configured through its Helm chart, requiring at least 25% of free storage space on each node (see my poorly drawn visualization of this below).

In more detail:

- The Persistent Volume Claims (PVCs) were clogged with these so-called "deleted" snapshots.

- This caused each PVC to hit its maximum specified limit of 50 GB.

- Attempts to drain the affected nodes were futile. The already bloated PVCs couldn't be moved to other nodes without violating Longhorn's 25% minimum free space rule.

Through Longhorn's GUI, we verified the storage constraints and observed that one of the nodes involved in the outage was still marked as "unschedulable" because of this, adding another layer to the problem.

The Fix: Adjusting Space Requirements and Draining

-

Lower Minimum Space Requirement: We updated the Longhorn setting for minimum free space from 25% to 10% via the Helm chart in ArgoCD.

-

Node Draining: We drained the problematic node using Longhorn's UI, effectively kicking off the resource reclamation process.

-

Verify: After this, we checked Longhorn again to confirm that the PVCs were reattached and the node was marked as schedulable, also the databases began to heal after the PVCs reattached.

Longhorn CronJobs , Cross-Namespace Limitations - Your Future Safeguards

Longhorn CronJobs for Improved Resource Management : To proactively manage storage resources and minimize the impact of the issues we faced, we implemented two cronjobs available in Longhorn version 1.5 and above.

Snapshot Cleanup: This cronjob is designed to remove snapshots that are older than one day. We've set this up because our snapshots are backed up to an S3 bucket via Velero. With off-cluster backups in place, there's no need for us to retain these older snapshots locally. This helps us free up valuable storage space on our nodes.

Filesystem Cleanup via FS-Trim: The second cronjob we implemented is an fs-trim operation. FS-Trim is used to reclaim unused blocks on the PVCs, thereby freeing up space that's not actively being used by any data. When snapshots get deleted, they leave behind unused blocks due to longhorns design ; this job ensures that those are cleaned up, helping to maintain an optimal storage state.Both of these cronjobs are configured through Longhorn's Custom Resource Definitions (CRDs) and are managed as part of our infrastructure-as-code (IaC) approach, although they can also be set directly via the Longhorn GUI for those who prefer a more manual approach.

Cross-Namespace Limitations: Currently, Longhorn's cronjobs only affect the Longhorn namespace. We raised a GitHub support ticket to request cross-namespace detection. For now, we've set up a Prometheus alert and occasionally run manual fs-trim operations through the Longhorn UI for services like Minio.

Conclusion for a resilient future

This experience taught us the intricacies of Longhorn's snapshot management and what sometimes makes disaster recovery such a complex process. We've put in place manual fixes and automated cronjobs, but there's still room for improvement, especially regarding cross-namespace storage management and the automation of that issue.

Edit 10/16/2023: We got a response to our support request on GitHub, and we can now enable automated trimming.

Sources: